Sitemap

Areas of Interest

New to the website? Not sure which career path you want to begin exploring? Start by selecting an Area of Interest. Our resources are categorized by Area of Interest and then further segmented into individual Program Type categories that represent specific career and degree paths.

Program Categories

Program Categories represent popular career and degree paths. Here, you can explore content tailored to specific degrees and careers. There are around 90 categories to explore. To save you time, here’s a small collection of the most popular Program Categories, presented in no particular order, with a preview of the content you’ll find within each category.

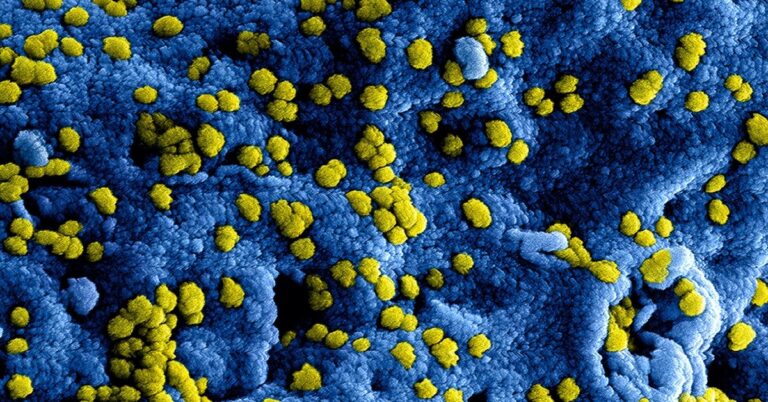

Computer Science

Business Administration

Special Guide: “Resource Guide for Prospective MBA Students”